Google, OpenAI Back Nonprofit To Foster Online Child Safety

- by Laurie Sullivan @lauriesullivan, February 10, 2025

Google, OpenAI and children’s gaming platform Roblox are among the firms backing a new nonprofit initiative to provide free online safety tools to companies worldwide.

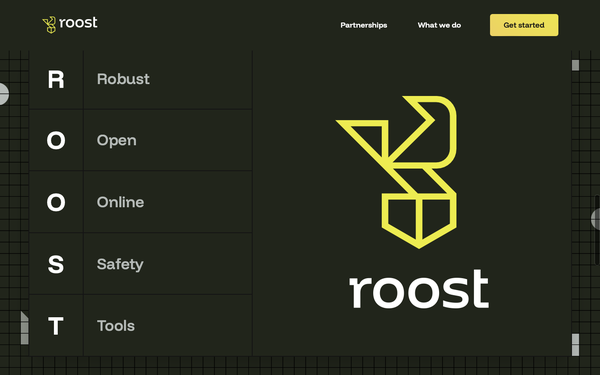

The announcement, made at the Paris AI summit on Monday, launches Robust Open Online Safety Tools (ROOST), an initiative focused on open-source safety tools for the era of artificial intelligence (AI).

“Robust Open Online Safety Tools, known as ROOST, addresses a critical need to accelerate innovation in online child safety and AI by providing small companies and nonprofits access to technologies they currently lack,” Eric Schmidt, founding partner of ROOST, said in a statement.

The group will start with a platform focused on child protection, built as a collaborative, open-source project to foster innovation and make essential infrastructure more transparent and accessible.


ROOST has backing from many top tech companies and philanthropies that want to ensure its success, Mozilla President Mark Surman and Mozilla AI Strategy Lead Ayah Bdeir wrote in a blog post.

“This is critical to building accessible, scalable and resilient safety infrastructure all of us need for the AI era,” the two wrote, adding that Mozilla’s support of ROOST is part of a bigger investment in open-source AI and safety.

Mozilla, Microsoft, and Bluesky are among the partners supporting the project.

Child exploitation reports rose 12% from 2022 to 2023, according to the most recent data from the National Center for Missing and Exploited Children. Reports involving video-game company Roblox increased 348% and those involving chat app Discord increased 100% in the period, the data showed.

In 2023, the CyberTipline received 4,700 reports of Child Sexual Abuse Material or other sexually exploitative content related to generative AI.

That same year, the CyberTipline received more than 186,000 reports regarding online enticement, up more than 300% from 2021. Online enticement is a form of exploitation involving an individual who communicates online with someone believed to be a child with the intent to commit a sexual offense or abduction.

Last year Meta Platforms, OpenAI and Google agreed to incorporate safety measures to protect children from exploitation and plug several holes in their practices, as AI supercharges the ability to create sexualized images and other exploitative material, such as fake nudes of real students at Westfield High School in New Jersey.